You may have skimmed this list before reading this intro and said to yourself there’s no way this can take 15 minutes. If you’re not familiar with SEO or audits in general, it could take a touch longer, but whenever I review a website for a client who is interested in our services, we always perform one of these 15-minute audits. This is not our full audit, in which we provide a report and recommendations to a client for implementation, but it does give us insights as we put together a proposal. It also lets us invest little time while extracting the most critical SEO data points.

Why These Quick SEO Audits Are Critical

Our agency gets referrals pretty frequently. We try to help every prospect we think we can make a difference for, but we all know that rarely happens in inbound marketing, or in any other form of marketing for that matter. We’re constantly lead scoring prospects and determining who can make it to the next level of engagement. Assuming the prospective client does, a quick audit allows us to put together a more robust proposal with specific, actionable solutions, have a deeper discussion with the client, and uncover challenges most of our competitors miss during the discovery process.

What This Is Not

As important as quick SEO audits can be, the line items below would slow the audit down too much to still call it quick. These activities can be performed as separate audits that build on this quick audit if you want to go further in depth.

- Our traditional 10 – 20 hour SEO audit

- Local Search (Map List/7 Pack) Audit

- How To Go About Link Building

- Development of a content strategy

- “Deep” Keyword Research Analysis

- Recommendations on how to fix

Who This Is For

- SEO Agencies & Professionals – If you are an agency or SEO professional, this list of checkpoints is definitely for you.

- Small to Medium Size Companies – Do you want to vet your next SEO agency, or conduct a quick audit of your existing SEO efforts?

- Ecommerce Site Owners – Are you getting the most out of your online store? Are there opportunities for search enhancements?

SEO Tools Of Choice

While I was two seconds away from turning this into a 5,000-word case study, I think a checklist for a quick audit is more fitting. Whether you are an agency or a business owner, these are the tools we typically use to perform a quick audit for clients. The list may seem daunting, but trust me: by working through the website one step at a time with these tools, we can get a full picture of a client’s state of search within 20 minutes.

- Google & Advanced Operators

- Google Webmaster Tools (Not Required)

- Google Analytics (Not Required)

- Google Keyword Planner

- Moz – Open Site Explorer

- Majestic SEO

- Panguin Tool

- Screaming Frog

- Google PageSpeed Insights

- Copyscape/Siteliner

Critical Steps Before Everything

Before performing any audit (long or quick), clear your browser cache and history. Also, ask the prospect a few initial questions: the keywords they want to rank for, the geographic markets they’re targeting, who their top 3 – 5 search competitors are, and finally for access to their Google Webmaster Tools and Google Analytics profiles.

SEO On-Page Optimization Audit

Keyword Research (2 Minutes)

Google Keyword Planner is my tool of choice and should be for most. Our team typically asks the prospect which keywords are most critical to rank for. We’ll only look at 10 – 20 keywords and determine which ones are easier to rank for than others by plugging them into Moz’s Keyword Difficulty Score Tool. Anything below 35 – 40 is fair game (depending on the site’s domain authority).
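To make the cutoff concrete, here is a minimal Python sketch that shortlists keywords below a difficulty threshold. The scores are placeholders you would paste in from whatever difficulty tool you use, and the default cutoff of 40 simply mirrors the 35 – 40 rule of thumb above; this sketch does not adjust for domain authority.

```python
def shortlist(difficulty_by_keyword, max_difficulty=40):
    """Return keywords scoring below max_difficulty, easiest first."""
    easy = [(kw, score) for kw, score in difficulty_by_keyword.items()
            if score < max_difficulty]
    return [kw for kw, _ in sorted(easy, key=lambda pair: pair[1])]


# Placeholder scores for illustration only, not real tool output.
scores = {"keyword a": 62, "keyword b": 38, "keyword c": 31}
print(shortlist(scores))                     # ['keyword c', 'keyword b']
print(shortlist(scores, max_difficulty=35))  # ['keyword c']
```

Lowering the cutoff toward 35 is one way to be more conservative for a site with weaker authority.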

Check Titles & Meta Descriptions

Because title tags are the most critical on-page ranking factor, we’ll do two things:

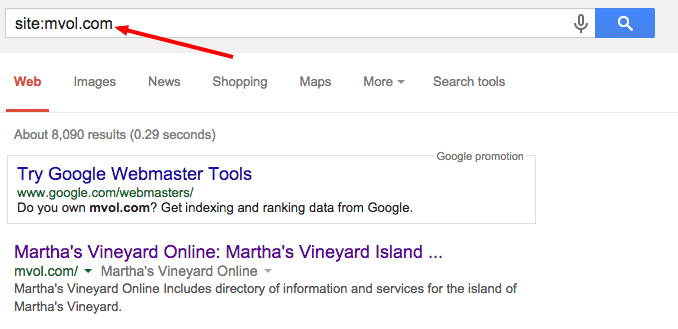

“site:website.com” – We perform this site: search for the website in Google to see how many pages have been indexed and, just as important, what the pages’ titles actually are. We typically compare these insights with our initial keyword research to determine what opportunities exist for further optimization.

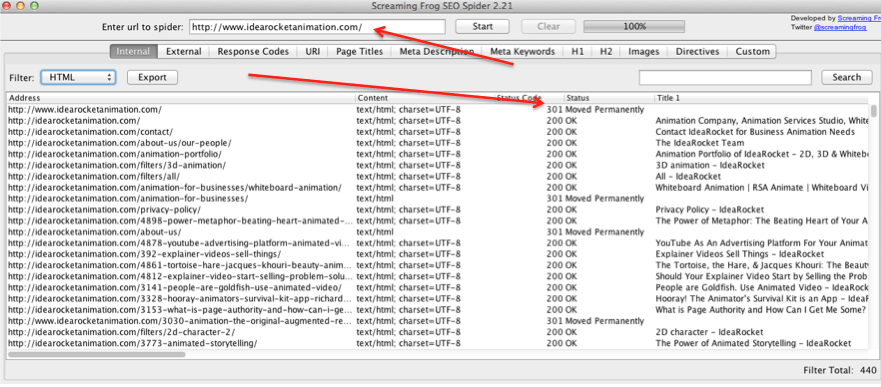

Screaming Frog – If the site has fewer than 5,000 or so pages, Screaming Frog will crawl it in a minute, tops. Here we go to the Internal tab or the Page Titles tab to get a closer look at all of the titles along with the meta descriptions. If we notice many pages using the same primary keyword phrases, or no title optimization at all, we take note of it.
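Screaming Frog does this for the whole site. For spot-checking a single page by hand, here is a minimal sketch using Python’s standard `html.parser`, assuming you already have the page’s HTML as a string (fetching it is left out).

```python
from html.parser import HTMLParser


class TitleMetaParser(HTMLParser):
    """Collects the <title> text and the meta description of one HTML page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def title_and_description(html):
    """Return (title, meta description); description is None if absent."""
    parser = TitleMetaParser()
    parser.feed(html)
    return parser.title.strip(), parser.description


page = '<head><title> Home | Example </title><meta name="description" content="Hello"></head>'
print(title_and_description(page))  # ('Home | Example', 'Hello')
```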

One other note when performing the crawl: notice that the www version redirects to the non-www version of the website. This is important to double-check because websites occasionally have both versions indexed by Google. People who link to your www version may not link to the non-www version, and that split can dilute the link equity passed to either one.
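A quick way to confirm the redirect outside the crawler is to request the non-preferred hostname once without following redirects. This is a minimal sketch using only Python’s standard library; `example.com` stands in for the site being audited, and treating only 301/308 as consolidation is this sketch’s assumption.

```python
import http.client
from urllib.parse import urlsplit


def first_hop(url):
    """Request url once, without following redirects; return (status, Location)."""
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.netloc, timeout=10)
    try:
        conn.request("HEAD", parts.path or "/")
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location")
    finally:
        conn.close()


def consolidates(status, location, preferred_host):
    """True if the response permanently redirects to the preferred host.

    302/307 are temporary, so they are not counted as consolidating here.
    """
    if status not in (301, 308) or not location:
        return False
    return urlsplit(location).hostname == preferred_host.lower()


# Usage (makes a network request):
# status, location = first_hop("http://www.example.com/")
# print(consolidates(status, location, "example.com"))
```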

Check Duplicate Titles & Meta Descriptions – Using the two methods above, we check which keywords we’re trying to improve rankings for and compare them across pages. The key is to reduce keyword cannibalization and ensure each page is optimized for its main keyword. This does not mean you should create a separate landing page for every keyword you want to rank for, but if high-level topical keywords appear in one title rather than being peppered across many others, you’re in good shape.
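If you export URLs and titles from your crawl, grouping identical titles is a few lines of Python. This sketch assumes a plain URL-to-title mapping (how you build it from the export is up to you); the same function works for meta descriptions.

```python
from collections import defaultdict


def duplicate_titles(pages):
    """Group URLs sharing the same title (case- and whitespace-insensitive)."""
    groups = defaultdict(list)
    for url, title in pages.items():
        groups[title.strip().lower()].append(url)
    # Only titles used by two or more URLs are potential cannibalization.
    return {title: urls for title, urls in groups.items() if len(urls) > 1}


pages = {"/a": "Baby Monitors", "/b": "baby monitors ", "/c": "About"}
print(duplicate_titles(pages))  # {'baby monitors': ['/a', '/b']}
```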

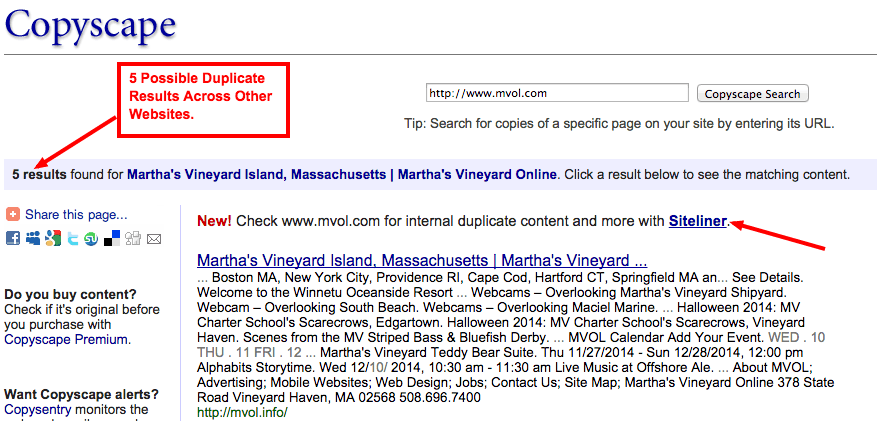

Check Scraped Content (On Site & Off Site) – We use the free version of Copyscape to search for scraped and duplicate copies of your content across the web. To check the amount of duplicate content within the website itself, we use Siteliner, which tells us how many pages share similar text.
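We don’t know exactly how Siteliner scores similarity, so the sketch below swaps in a common stand-in technique: word-shingle Jaccard similarity between pages. The 5-word shingles and the 0.5 threshold are arbitrary assumptions for illustration, and the input is assumed to be each page’s visible text.

```python
import re
from itertools import combinations


def shingles(text, k=5):
    """Set of overlapping k-word sequences from the text."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}


def jaccard(a, b):
    """Size of the intersection divided by size of the union."""
    return len(a & b) / len(a | b) if a | b else 0.0


def near_duplicates(pages, threshold=0.5, k=5):
    """Return (url_a, url_b, similarity) for page pairs at or above threshold."""
    sets = {url: shingles(text, k) for url, text in pages.items()}
    pairs = []
    for a, b in combinations(sets, 2):
        score = jaccard(sets[a], sets[b])
        if score >= threshold:
            pairs.append((a, b, round(score, 2)))
    return pairs
```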

Image Alt Text – How many images does the website currently use, and how many of them are missing alt text? This is vital information for further optimizing the website’s pages, adding relevancy, and possibly collecting additional search traffic via image search.
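For a single page, counting images without alt text can be sketched with Python’s `html.parser`. This version reports images with no `alt` attribute separately from those with an empty one, since an empty `alt=""` is sometimes intentional for decorative images.

```python
from html.parser import HTMLParser


class AltTextAudit(HTMLParser):
    """Counts <img> tags and records which lack usable alt text."""

    def __init__(self):
        super().__init__()
        self.total = 0
        self.missing = []  # no alt attribute at all
        self.empty = []    # alt present but blank

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        self.total += 1
        alt = attrs.get("alt")
        if alt is None:
            self.missing.append(attrs.get("src"))
        elif not alt.strip():
            self.empty.append(attrs.get("src"))


def audit_alt_text(html):
    audit = AltTextAudit()
    audit.feed(html)
    return {"total": audit.total, "missing": audit.missing, "empty": audit.empty}


print(audit_alt_text('<img src="a.png" alt="Logo"><img src="b.png"><img src="c.png" alt="">'))
# {'total': 3, 'missing': ['b.png'], 'empty': ['c.png']}
```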

H1 – H3 Tags – Another place to add relevance to key pages. Here, we check whether the target keywords are used properly in the h1 – h3 tags. If they aren’t, we make note of it in our proposal or in the brief conversation we have with the client. We can pull this information from Screaming Frog or by going through the key pages of the website and inspecting their HTML.
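Inspecting the HTML by hand can be partly automated. This minimal sketch collects h1 – h3 text with `html.parser` and reports whether a target keyword appears at each level; a plain case-insensitive substring match is an assumption, so variants and plurals won’t be caught.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collects the text of h1, h2 and h3 elements, keyed by tag name."""

    def __init__(self):
        super().__init__()
        self.headings = {"h1": [], "h2": [], "h3": []}
        self._current = None

    def handle_starttag(self, tag, attrs):
        if tag in self.headings:
            self._current = tag
            self.headings[tag].append("")

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        # Text inside nested tags (e.g. <span>) still counts toward the heading.
        if self._current:
            self.headings[self._current][-1] += data


def keyword_in_headings(html, keyword):
    collector = HeadingCollector()
    collector.feed(html)
    kw = keyword.lower()
    return {tag: any(kw in text.lower() for text in texts)
            for tag, texts in collector.headings.items()}


html = "<h1>Baby <span>Breathing</span> Monitor</h1><h2>Features</h2>"
print(keyword_in_headings(html, "breathing monitor"))
# {'h1': True, 'h2': False, 'h3': False}
```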

Home Page Content – Finally, does the home page have sufficient content? Could more information or blocks of content be added to improve search traffic and user experience?

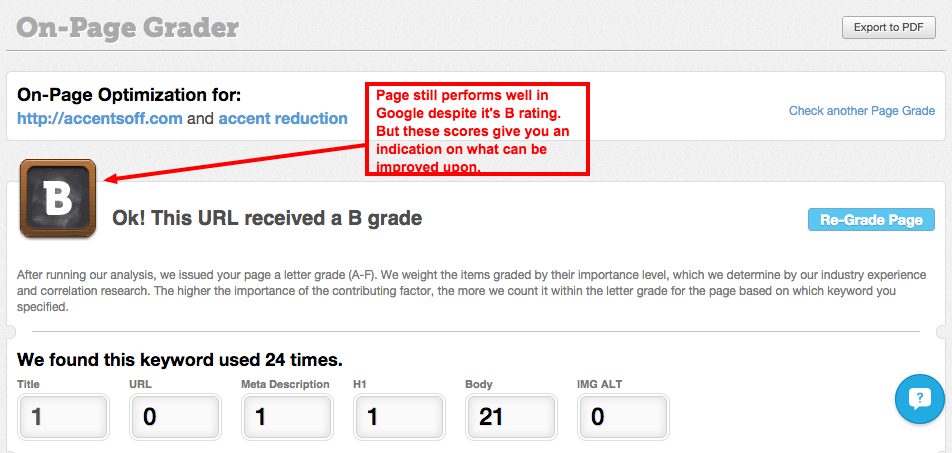

Moz Page Grader – As simple as this tool is, it is powerful because it keeps us honest about whether we’re truly optimizing a page for specific keywords. The Moz Page Grader lets you enter a URL and a target keyword to see how well the page is optimized for that keyword. I’ve seen pages receive a C or D grade yet still rank fairly high for that keyword; reasons could include anchor text, the use of content and latent semantic keywords on the page, and even use of the keyword in h2 – h4 tags. Nonetheless, we’re looking to see where the page stands and which areas can be improved.

Technical SEO Audit

When you ran your Screaming Frog crawl, you extracted a lot more information than on-page factors. The majority of our quick analysis comes from Screaming Frog, but we also delve into Google Webmaster Tools to pull the rest. GWT is not required for a quick analysis, but it can be very useful.

Clean URL Strings – This is not a well-optimized URL string: http://www.snuza.com/content.php?product=go. You want URL strings that are short but rich in keywords and topics that reflect what the page is truly about. Parameter-driven URLs like this one still show up often on ecommerce websites and older CMS platforms. Keywords in the URL still send relevancy signals and should be considered when reviewing a website.
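Flagging URL strings like the one above across a crawl export can be sketched with `urllib.parse`. The checks (query parameters, a script file name in the path, overall length) and the 75-character limit are hypothetical heuristics chosen for this sketch, not fixed rules.

```python
from urllib.parse import urlsplit

SCRIPT_EXTENSIONS = (".php", ".asp", ".aspx", ".jsp")


def url_issues(url, max_length=75):
    """List traits of a URL string that usually make it less descriptive."""
    parts = urlsplit(url)
    issues = []
    if parts.query:
        issues.append("query string parameters")
    if parts.path.lower().endswith(SCRIPT_EXTENSIONS):
        issues.append("script file name in path")
    if len(url) > max_length:
        issues.append(f"longer than {max_length} characters")
    return issues


print(url_issues("http://www.snuza.com/content.php?product=go"))
# ['query string parameters', 'script file name in path']
```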

Robots.txt File Check – Were key URL strings accidentally added to the file, blocking sections or key portions of the website? Conversely, maybe specific sections should be added to the file because we simply don’t want Google or other search bots to crawl them. Either way, it’s important to report it. To view the file, go to www.website.com/robots.txt in your browser; it lists the URL strings and directives in effect.
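To test whether key URLs are accidentally blocked, Python’s standard `urllib.robotparser` can evaluate a robots.txt you have pasted in. One caveat: this parser follows the original robots.txt rules, so it does not understand Google’s `*`/`$` path wildcards and applies the first matching rule rather than Google’s most-specific match. Treat the result as a rough check.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


robots = "User-agent: *\nDisallow: /checkout/\n"
print(blocked_urls(robots, ["https://example.com/checkout/cart",
                            "https://example.com/products/go"]))
# ['https://example.com/checkout/cart']
```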

Page Speed – Where does your current page speed score stand? Do you need to reduce image file sizes? Is JavaScript scattered all over the place? Are you leveraging browser caching, particularly for static content that doesn’t need to be reloaded on every visit? The team over at Authority Hacker has a nice case study on how they used page speed to improve search and user experience. Simply head over to https://developers.google.com/speed/pagespeed/insights/, type in your URL, and you’ll get a sense of where you stand speed-wise.

You can also check page speed in Google Analytics under Behavior > Site Speed for the account you’re analyzing. Again, this is a quick audit, so if you don’t have Google Analytics access, you can simply check these metrics with Google PageSpeed Insights.
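If you want to check several URLs at once, PageSpeed Insights also has an HTTP API. This is a minimal sketch against the v5 `runPagespeed` endpoint, reading the Lighthouse performance score (reported from 0 to 1) and scaling it to 0 – 100. An API key may be needed for anything beyond occasional use; it is not included here.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url, strategy="mobile"):
    """Build the API request URL; strategy is 'mobile' or 'desktop'."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


def performance_score(report):
    """Extract the Lighthouse performance score (0-100) from a v5 response."""
    score = report["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)


def fetch_score(page_url, strategy="mobile"):
    """Call the API (network request) and return the performance score."""
    with urlopen(psi_request_url(page_url, strategy), timeout=60) as resp:
        return performance_score(json.load(resp))


# Usage (makes a network request):
# print(fetch_score("https://example.com/"))
```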

Multiple Homepages – We run into this problem fairly often. www.example.com, example.com, example.com/index.html, example.com/index.shtml, and www.example.com/index.php are all different homepage URLs. Most of them can be 301 redirected to the homepage you want Google to index, while some can’t. This can be a problem, but also an opportunity if people link to or share variations of your homepage other than the one with the most link equity.
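When you have a list of crawled or linked URLs, a small normalizer can pick out every variant that is really the homepage, so each one can be checked for a 301 to the preferred version. This sketch assumes the index file names listed below; extend the set for other platforms.

```python
from urllib.parse import urlsplit

INDEX_FILES = {"index.html", "index.htm", "index.shtml", "index.php"}


def homepage_key(url):
    """Reduce a URL to (host without 'www.', path without a trailing index file)."""
    parts = urlsplit(url if "//" in url else "//" + url)
    host = (parts.hostname or "").removeprefix("www.")
    path = parts.path.rstrip("/")
    last = path.rsplit("/", 1)[-1]
    if last.lower() in INDEX_FILES:
        path = path[: -len(last)].rstrip("/")
    return host, path or "/"


def homepage_variants(urls, host):
    """Return the URLs that normalize to the root of the given host."""
    target = (host.lower().removeprefix("www."), "/")
    return [u for u in urls if homepage_key(u) == target]


urls = ["www.example.com", "example.com", "example.com/index.shtml",
        "http://www.example.com/index.php", "example.com/about"]
print(homepage_variants(urls, "example.com"))  # all but example.com/about
```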

301s & 404s, and Their Cousins – Are you dealing with a large number of 404 errors? We check for this via Screaming Frog, Moz, and Google Webmaster Tools if we’re granted access. It’s also important to check for 500 errors on pages that time out or return server errors. Also look for 302 redirects: they are temporary and may not pass link equity from the old URL to the intended new page, so any unintended 302s should be reviewed and noted.
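Once you have status codes from a crawl export, summarizing them is straightforward. This sketch assumes a simple mapping of URL to HTTP status code; the column names in your crawler’s export will vary.

```python
from collections import Counter


def status_summary(crawl):
    """Count URLs per status code and list the ones worth a closer look."""
    counts = Counter(crawl.values())
    flagged = {
        "404": [u for u, s in crawl.items() if s == 404],
        "5xx": [u for u, s in crawl.items() if 500 <= s < 600],
        "302": [u for u, s in crawl.items() if s == 302],  # possibly unintended
    }
    return counts, flagged


crawl = {"/a": 200, "/b": 404, "/c": 302, "/d": 503, "/e": 200}
counts, flagged = status_summary(crawl)
print(counts[200], flagged)
# 2 {'404': ['/b'], '5xx': ['/d'], '302': ['/c']}
```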

Existing Penalties (Manual/Algorithmic) – Finally, does the website have some sort of penalty? We perform a site: search for the website in Google to make sure it is indexed. We also check Google Analytics traffic for any major drop-offs, then use the Panguin tool to overlay Google’s algorithm updates on those traffic drops to further confirm our findings. Finally, we check Google Webmaster Tools to confirm no manual penalty has been applied to the website.
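The overlay idea can be sketched in Python: find week-over-week traffic drops and pair each with any algorithm update dated nearby. The weekly session counts and update dates below are made-up inputs, and the 30% drop threshold and 7-day window are arbitrary assumptions; a match only suggests a link, it doesn’t prove a penalty.

```python
from datetime import date


def drops_near_updates(weekly_sessions, update_dates, min_drop=0.3, window_days=7):
    """Pair week-over-week traffic drops with updates dated within window_days.

    weekly_sessions: list of (week_start_date, sessions), sorted by date.
    update_dates: dates of algorithm updates you want to compare against.
    """
    matches = []
    for (_, prev), (week, current) in zip(weekly_sessions, weekly_sessions[1:]):
        if prev == 0:
            continue
        drop = (prev - current) / prev
        if drop < min_drop:
            continue
        for update in update_dates:
            if abs((week - update).days) <= window_days:
                matches.append((week, update, round(drop, 2)))
    return matches


# Hypothetical data for illustration only.
sessions = [(date(2015, 1, 5), 1000), (date(2015, 1, 12), 980),
            (date(2015, 1, 19), 600), (date(2015, 1, 26), 610)]
print(drops_near_updates(sessions, [date(2015, 1, 16)]))
```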

Backlink Profile Audit

Low Quality/Spammy/Irrelevant Links – If a website has many, or a high proportion of, low-quality spammy links pointing to it, you need to know so they can be removed or disavowed. You can use any backlink analysis tool; we still use Majestic SEO, even though Ahrefs has proven to be a better and more up-to-date tool for backlink analysis.
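Once links are flagged, Google’s disavow file is plain text: one URL or `domain:` entry per line, with `#` for comments. This sketch builds one from a domain-to-score mapping; using Majestic’s Trust Flow with a cutoff of 10 is a hypothetical heuristic, and a low score alone doesn’t prove a link is spammy, so review the list manually before uploading anything.

```python
def flag_low_trust(trust_flow_by_domain, min_trust_flow=10):
    """Referring domains whose score falls below a hypothetical cutoff."""
    return sorted(d for d, tf in trust_flow_by_domain.items() if tf < min_trust_flow)


def disavow_text(domains, urls=()):
    """Render a disavow file: a comment, then 'domain:' lines, then single URLs."""
    lines = ["# Flagged during quick audit; review manually before uploading"]
    lines += [f"domain:{d}" for d in sorted(set(domains))]
    lines += sorted(set(urls))
    return "\n".join(lines) + "\n"


flagged = flag_low_trust({"good.example": 40, "spam.example": 2})
print(disavow_text(flagged))
```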

Anchor Text Distribution – Is the website’s anchor text over-optimized with keywords? Are the deeper, second-level pages over-optimized? If so, we note this to the prospect or in the proposal we submit. This part of the audit is not meant to improve the website’s search presence so much as to prevent future harm to it.
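Given the anchor texts from a backlink export, the distribution and the share of exact-match keyword anchors take a few lines. This sketch assumes one anchor string per backlink; what share counts as “too optimized” is a judgment call and isn’t encoded here.

```python
from collections import Counter


def anchor_distribution(anchors):
    """Share of backlinks per anchor text (case-insensitive), largest first."""
    counts = Counter(a.strip().lower() for a in anchors)
    total = sum(counts.values())
    return [(text, round(n / total, 2)) for text, n in counts.most_common()]


def exact_match_share(anchors, keywords):
    """Fraction of anchors that exactly match one of the target keywords."""
    targets = {k.lower() for k in keywords}
    hits = sum(1 for a in anchors if a.strip().lower() in targets)
    return hits / len(anchors) if anchors else 0.0


anchors = ["Example Co", "baby monitor", "Baby Monitor", "click here"]
print(anchor_distribution(anchors))
# [('baby monitor', 0.5), ('example co', 0.25), ('click here', 0.25)]
print(exact_match_share(anchors, ["baby monitor"]))  # 0.5
```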

Sitewide Links – Based on the link profile, does the website have many backlinks from just a few websites? This can happen when someone links to your website in the footer or sidebar of their site, so your backlink shows up on every post and page. If this happens too often, it can pose a problem and expose your website to potential manual penalties.
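Sitewide links show up as a handful of referring domains supplying a large share of all backlinks. This sketch assumes a list of the source URLs of each backlink; the 20% share cutoff is a hypothetical threshold for illustration.

```python
from collections import Counter
from urllib.parse import urlsplit


def concentrated_domains(backlink_sources, max_share=0.2):
    """Referring domains that supply more than max_share of all backlinks."""
    domains = Counter(urlsplit(u).hostname for u in backlink_sources)
    total = sum(domains.values())
    return {d: round(n / total, 2) for d, n in domains.items() if n / total > max_share}


sources = [f"https://blog.example.net/post-{i}" for i in range(8)]
sources += ["https://a.example.org/x", "https://b.example.org/y"]
print(concentrated_domains(sources))  # {'blog.example.net': 0.8}
```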

Page/Domain Authority – A simple metric: what are the domain and page authority of the website, and how do they compare to a few of its primary search competitors (not simply its competitors in real life)? This is a Moz metric you can find by going to www.opensiteexplorer.org and plugging in the URL in question.

Putting It All Together

Based on your quick SEO audit, you should have a pretty decent sense of where the website stands and where improvements can be made. This quick audit can be the foundation for the more in-depth SEO audit you’ll perform when you’re scrubbing through the Google Webmaster Tools and Google Analytics data.